Introduction

With the rapid development of intelligent technology, image data acquisition and processing has become a key technology in fields such as autonomous driving and industrial applications. High-quality image acquisition and algorithm integration testing are therefore essential to system performance and reliability. The growing demand for image acquisition, processing, and analysis calls not only for high-performance camera hardware but also for the ability to integrate and test a variety of algorithms efficiently.

In response to these challenges, we have developed a multi-source camera data acquisition and algorithm integration test solution. It meets the diverse needs of image acquisition and algorithm testing across application scenarios and helps ensure data accuracy and algorithm effectiveness.

Figure 1: Basic structure of the camera

Camera Composition

A camera generally consists of a lens, an image sensor, an image signal processor (ISP), and an interface.

- The image sensor converts photons into electrical signals. The number and size of its pixels directly affect image sharpness and light sensitivity, making it a central factor in image quality.

- The ISP converts the raw data captured by the sensor into high-quality digital images through steps such as demosaicing, white balance, and color correction, ensuring that the output is usable.

- The interface is the channel between the camera and external devices. It carries image data, power, and control signals, and is indispensable to the operation of the overall system.

In practice, common camera types include USB cameras, Ethernet cameras, and in-vehicle FAKRA cameras, each with its own characteristics and application scenarios. For example, driver-free USB cameras are known for plug-and-play operation and portability; Ethernet cameras offer high frame rates and network connectivity; and in-vehicle cameras emphasize high stability and long-distance transmission.

Different application scenarios (e.g., automated driving, industrial inspection, traffic monitoring) place different demands on image resolution, frame rate, transmission distance, and algorithm deployment, so different types of cameras are needed to cover the full range of data acquisition and algorithm testing needs.

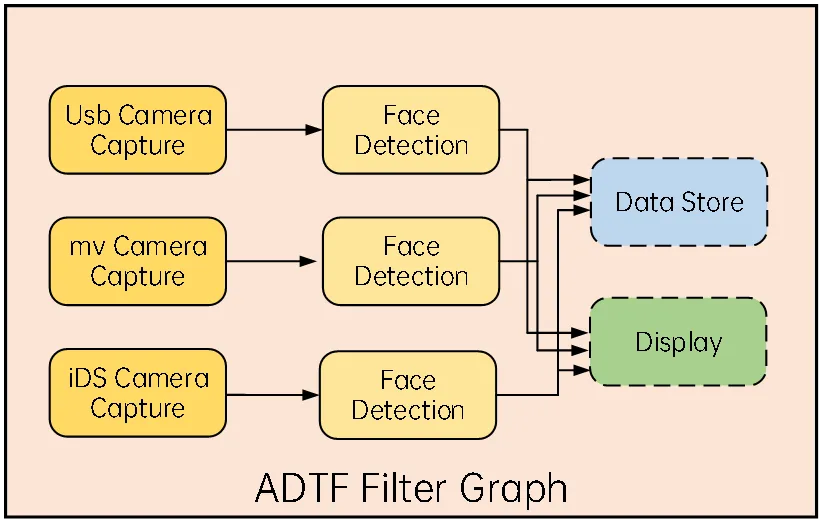

Multi-source Camera Acquisition and Testing Solution

When integrating multi-source cameras, we usually configure key parameters such as resolution and frame rate for each type of camera, and then feed the images from the different sources into the algorithm pipeline for real-time testing and analysis, to confirm that the images from every source meet the expected quality.

In practice, each type of camera usually comes with its own SDK or API, such as V4L2 for driver-free USB cameras. Even so, the following questions often arise:

- How to effectively integrate various cameras and perform data acquisition and real-time visualization at the same time?

- How can multiple devices of each camera type be quickly driven and captured from simultaneously?

- How can frames from different types of cameras be timestamped correctly in in-vehicle applications?

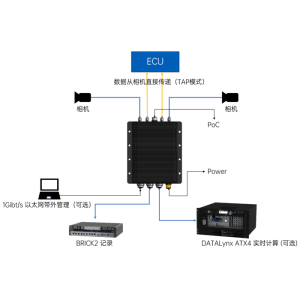

To meet these challenges, we have integrated the BRICKplus/BRICK2 hardware acquisition platform with the ADTF software framework, allowing multi-source cameras to work together efficiently within a single system and to complete data acquisition and algorithm validation quickly in a test environment.

Figure 2: BRICKplus/BRICK2

Figure 3: ADTF Software

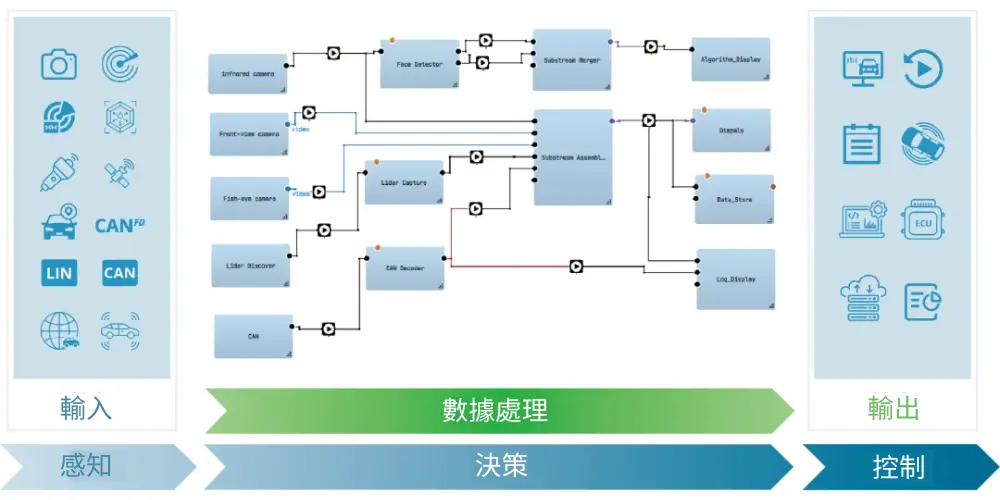

On the software side, a modular plug-in design is adopted. It integrates the SDKs for driver-free USB cameras, industrial area-scan cameras, and IDS Ethernet cameras, and also wraps the interfaces of algorithm components and outputs their test results.

Figure 4: Software Framework

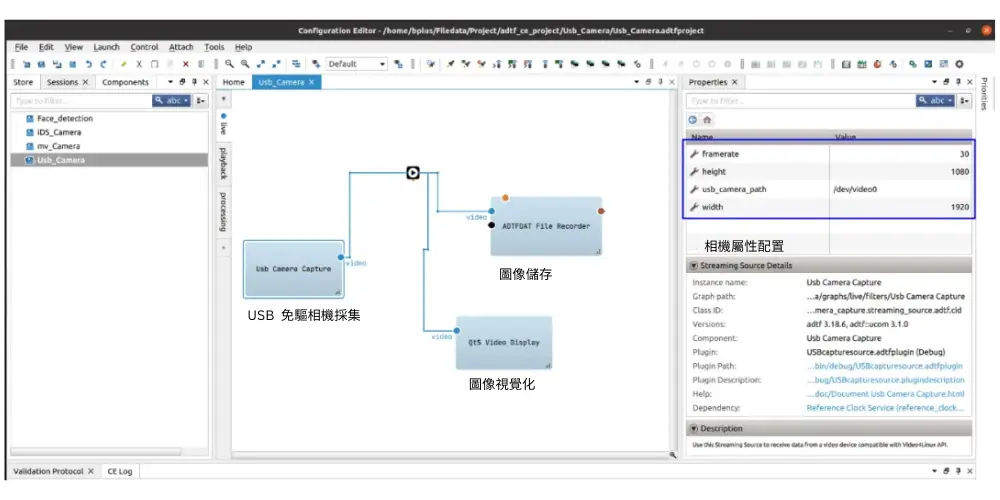

For different types of sensors, the camera acquisition process typically follows the same steps: opening the device, setting parameters and formats, requesting memory buffers, starting the video stream, and acquiring images in a loop. Through modular design, these steps can be abstracted into camera components. This architecture lets developers integrate multiple cameras quickly in a graphical way while storing or visualizing the data at the same time. To keep multiple cameras synchronized on the time axis, hardware or software time synchronization mechanisms (e.g., trigger signals or PTP) can also be used. Together with properly configured lens correction and multi-view geometry parameters, this can significantly improve the accuracy of algorithm inference.
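The acquisition steps listed above can be sketched as a small abstraction. This is only an illustration of the idea, not the ADTF plug-in API: `CameraConfig`, `CameraComponent`, `FakeCamera`, and all method names are hypothetical, and `FakeCamera` produces blank frames so the sketch runs without hardware.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Iterator, List


@dataclass
class CameraConfig:
    width: int
    height: int
    fps: int
    pixel_format: str  # e.g. "MONO8" or "RGB8"


class CameraComponent(ABC):
    """Hypothetical base class: one acquisition lifecycle for every camera type."""

    def __init__(self, config: CameraConfig, num_buffers: int = 4):
        self.config = config
        self.num_buffers = num_buffers

    # Each backend (USB, Ethernet, in-vehicle) would implement these with its own SDK.
    @abstractmethod
    def open_device(self) -> None: ...
    @abstractmethod
    def apply_config(self) -> None: ...
    @abstractmethod
    def request_buffers(self) -> None: ...
    @abstractmethod
    def start_stream(self) -> None: ...
    @abstractmethod
    def read_frame(self) -> bytes: ...
    @abstractmethod
    def stop_stream(self) -> None: ...
    @abstractmethod
    def close_device(self) -> None: ...

    def capture(self, num_frames: int) -> Iterator[bytes]:
        """Run the full lifecycle and yield frames; always stop and close on exit."""
        self.open_device()
        try:
            self.apply_config()
            self.request_buffers()
            self.start_stream()
            try:
                for _ in range(num_frames):
                    yield self.read_frame()
            finally:
                self.stop_stream()
        finally:
            self.close_device()


class FakeCamera(CameraComponent):
    """Stand-in backend that records each step and returns blank frames."""

    BYTES_PER_PIXEL = {"MONO8": 1, "RGB8": 3}

    def __init__(self, config: CameraConfig, num_buffers: int = 4):
        super().__init__(config, num_buffers)
        self.calls: List[str] = []

    def open_device(self) -> None: self.calls.append("open")
    def apply_config(self) -> None: self.calls.append("config")
    def request_buffers(self) -> None: self.calls.append("buffers")
    def start_stream(self) -> None: self.calls.append("start")
    def stop_stream(self) -> None: self.calls.append("stop")
    def close_device(self) -> None: self.calls.append("close")

    def read_frame(self) -> bytes:
        self.calls.append("read")
        cfg = self.config
        return bytes(cfg.width * cfg.height * self.BYTES_PER_PIXEL[cfg.pixel_format])
```

With this shape, adding a new camera type means implementing the seven backend methods; the capture loop and its cleanup logic stay shared across all types.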

Figure 5: Camera Acquisition Project

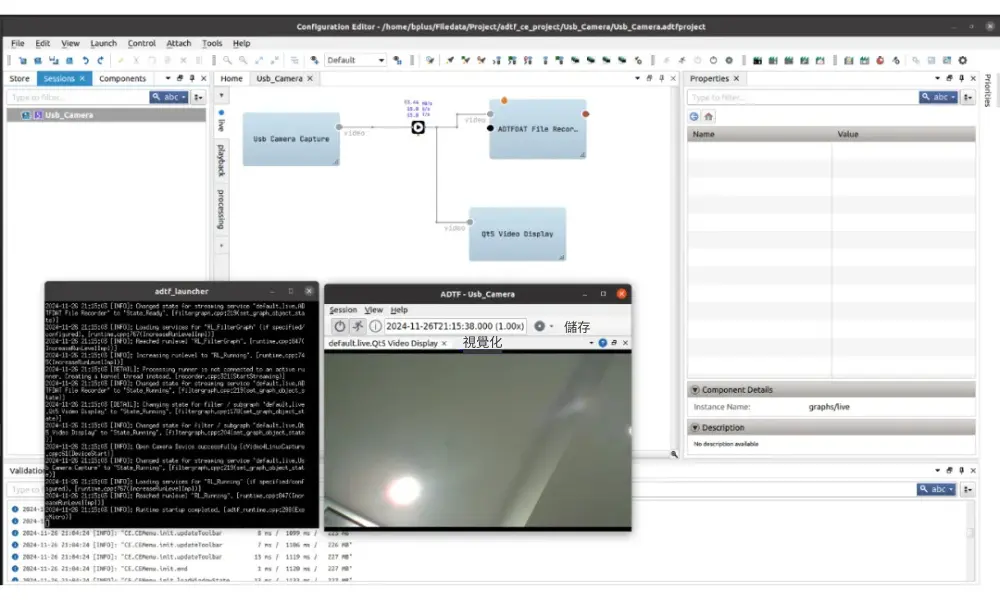

Figure 6: Camera acquisition project running

Application Case Sharing

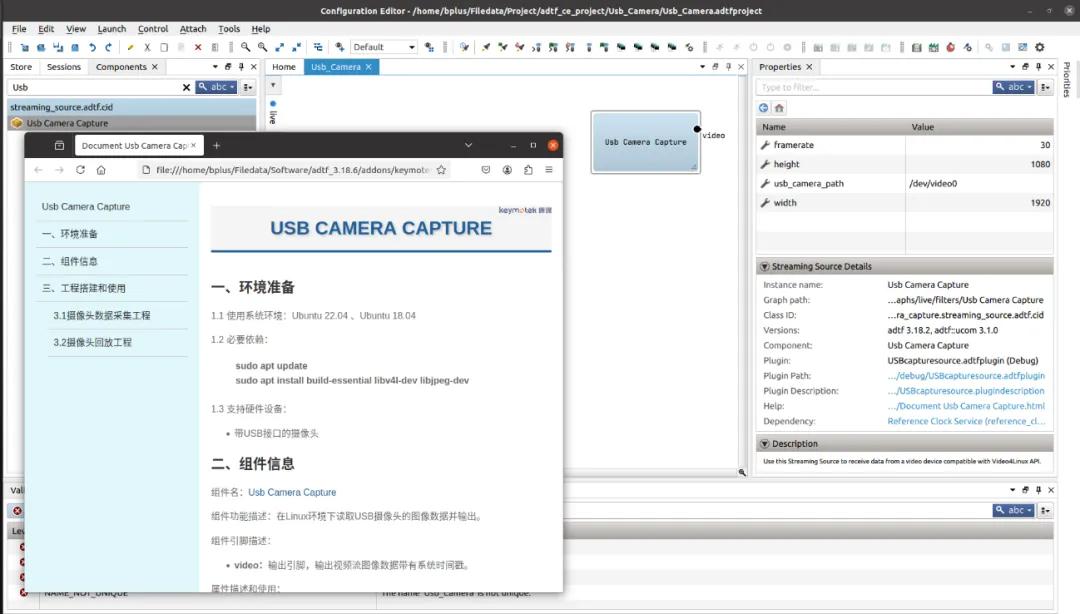

In the Streaming Source Details section on the right side of the software interface, click Help to open the manual for the Usb Camera Capture component. It covers environment preparation, component information, and an example of how to build a project.

Figure 7: Component User's Manual

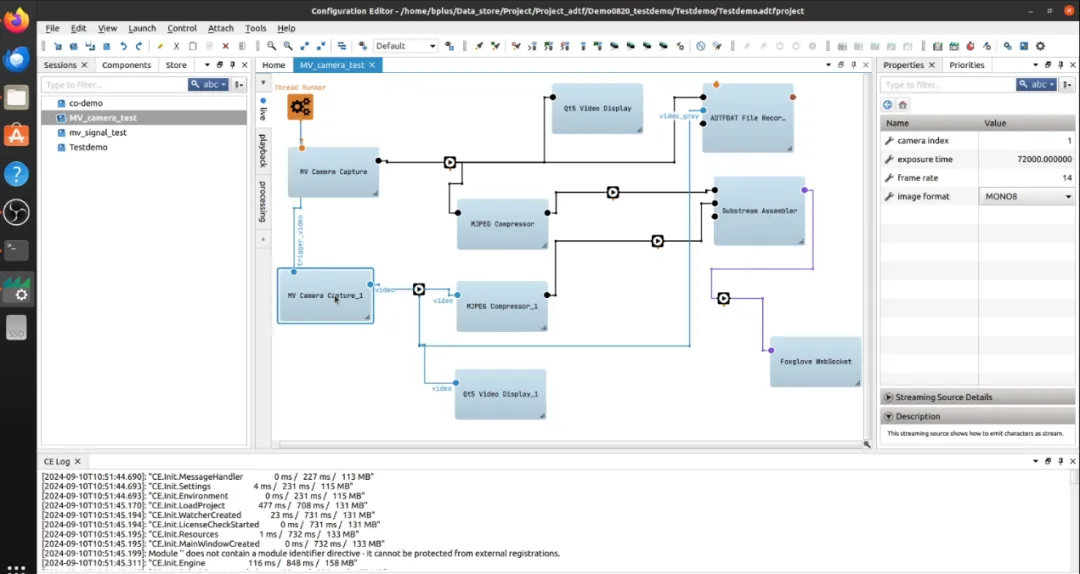

For example, the Properties panel of the MV Camera Capture component shows its configurable attributes, including the camera's mount node, exposure time, frame rate, and image acquisition mode. The image acquisition mode can be either color or grayscale: MONO8 for grayscale images and RGB8 for color images.

Figure 8: Two-camera acquisition project

Each MV Camera Capture component corresponds to one physical camera, so each channel of data is acquired and processed independently.
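The choice between MONO8 and RGB8 directly affects buffer size and bandwidth. The sketch below assumes the usual meaning of these formats (1 byte per pixel for MONO8, 3 bytes per pixel for RGB8); the 1920x1080 resolution and 30 fps are illustrative values, not settings taken from the project shown here.

```python
# Bytes per pixel for the two formats mentioned above (assumed standard meaning).
BYTES_PER_PIXEL = {"MONO8": 1, "RGB8": 3}


def frame_bytes(width: int, height: int, pixel_format: str) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * BYTES_PER_PIXEL[pixel_format]


def data_rate_mb_per_s(width: int, height: int, pixel_format: str, fps: int) -> float:
    """Uncompressed data rate in megabytes (10^6 bytes) per second."""
    return frame_bytes(width, height, pixel_format) * fps / 1e6


for fmt in ("MONO8", "RGB8"):
    size = frame_bytes(1920, 1080, fmt)
    rate = data_rate_mb_per_s(1920, 1080, fmt, 30)
    print(f"{fmt}: {size} bytes/frame, {rate:.3f} MB/s")
# MONO8: 2073600 bytes/frame, 62.208 MB/s
# RGB8: 6220800 bytes/frame, 186.624 MB/s
```

An estimate like this helps check whether the interface and storage can sustain the chosen mode before several cameras are added to one project.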
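When two independently captured streams must later be processed together, their frames are commonly paired by timestamp. The following is a minimal sketch of one such approach, assuming each stream provides sorted timestamps in microseconds; the function name and the tolerance value are hypothetical and do not describe how the platform itself aligns data.

```python
from typing import List, Tuple


def pair_by_timestamp(ts_a: List[int], ts_b: List[int],
                      tolerance_us: int) -> List[Tuple[int, int]]:
    """Pair frame indices from two sorted timestamp lists (microseconds)
    whose timestamps differ by at most tolerance_us."""
    pairs = []
    i = j = 0
    while i < len(ts_a) and j < len(ts_b):
        diff = ts_a[i] - ts_b[j]
        if abs(diff) <= tolerance_us:
            pairs.append((i, j))
            i += 1
            j += 1
        elif diff < 0:
            i += 1  # frame A is too old to match any remaining B frame
        else:
            j += 1  # frame B is too old to match any remaining A frame
    return pairs


# Camera A runs at roughly 30 fps; camera B dropped its third frame.
ts_a = [0, 33_000, 66_000, 99_000]
ts_b = [1_000, 34_500, 101_000]
print(pair_by_timestamp(ts_a, ts_b, tolerance_us=5_000))
# [(0, 0), (1, 1), (3, 2)]
```

The tolerance should stay well below half the frame period so that each frame has at most one plausible partner; unmatched frames, such as the third frame of camera A here, are simply skipped.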

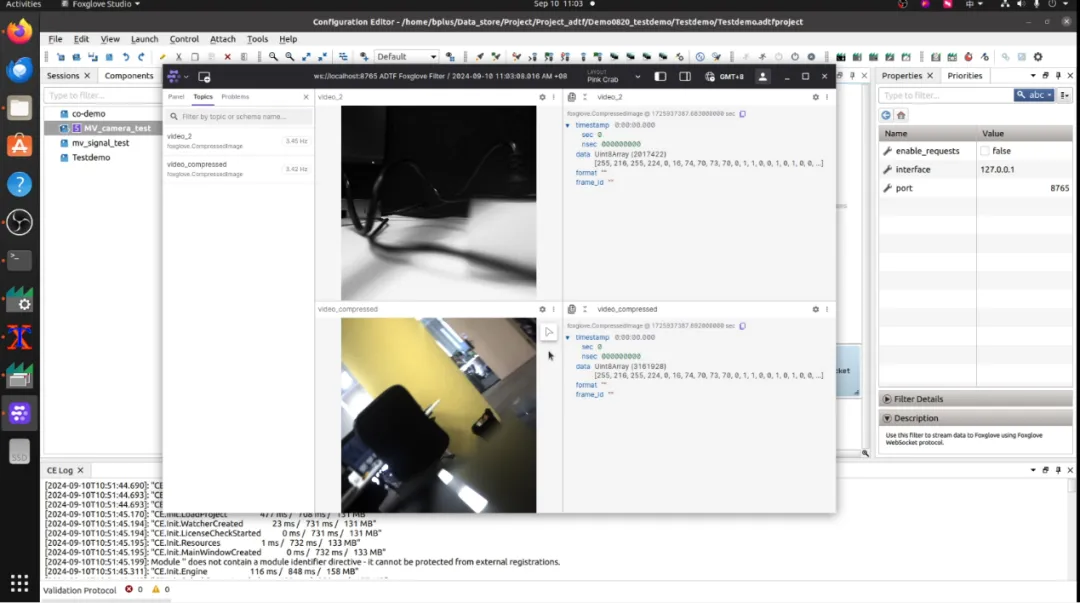

Figure 9: Two-camera acquisition project running

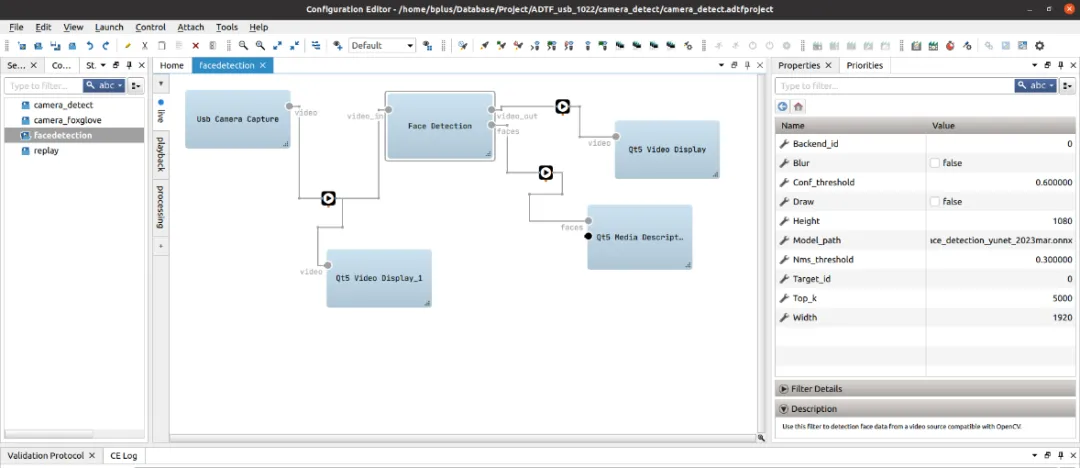

Algorithm testing is done by connecting camera capture data to algorithm components. For example, by combining the Usb Camera Capture component, the Face Detection component, the Qt5 Video Display component, and the Qt5 Media Description Display component, we can quickly set up a single-camera capture and face recognition algorithm test project.

Figure 10: Face recognition algorithm project
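Conceptually, such a project is a chain of a source, an algorithm, and one or more displays. The sketch below illustrates only that wiring in plain Python; it is not ADTF code, and `bright_region_detector` is a deliberately trivial stand-in for a real face detector. All names are hypothetical.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Frame = List[List[int]]            # grayscale image as rows of 0..255 values
Box = Tuple[int, int, int, int]    # (x, y, width, height)


def bright_region_detector(frame: Frame, threshold: int = 128) -> Optional[Box]:
    """Stand-in for a real detector: bounding box of all pixels above threshold."""
    hits = [(x, y) for y, row in enumerate(frame)
            for x, value in enumerate(row) if value > threshold]
    if not hits:
        return None
    xs = [x for x, _ in hits]
    ys = [y for _, y in hits]
    return (min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1)


def run_pipeline(source: Iterable[Frame],
                 detector: Callable[[Frame], Optional[Box]],
                 sinks: List[Callable[[Frame, Optional[Box]], None]]) -> None:
    """Push every frame from the source through the detector, then to every sink."""
    for frame in source:
        result = detector(frame)
        for sink in sinks:
            sink(frame, result)


# Two tiny test frames: one with a bright region, one completely dark.
frames = [
    [[0, 0, 0], [0, 200, 210], [0, 0, 0]],
    [[0, 0, 0], [0, 0, 0], [0, 0, 0]],
]
results: List[Optional[Box]] = []
run_pipeline(frames, bright_region_detector,
             [lambda frame, box: results.append(box)])
print(results)
# [(1, 1, 2, 1), None]
```

Because the source, detector, and sinks only meet through simple call signatures, swapping the camera or the algorithm does not require changes to the rest of the chain, which is the property the component-based project relies on.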

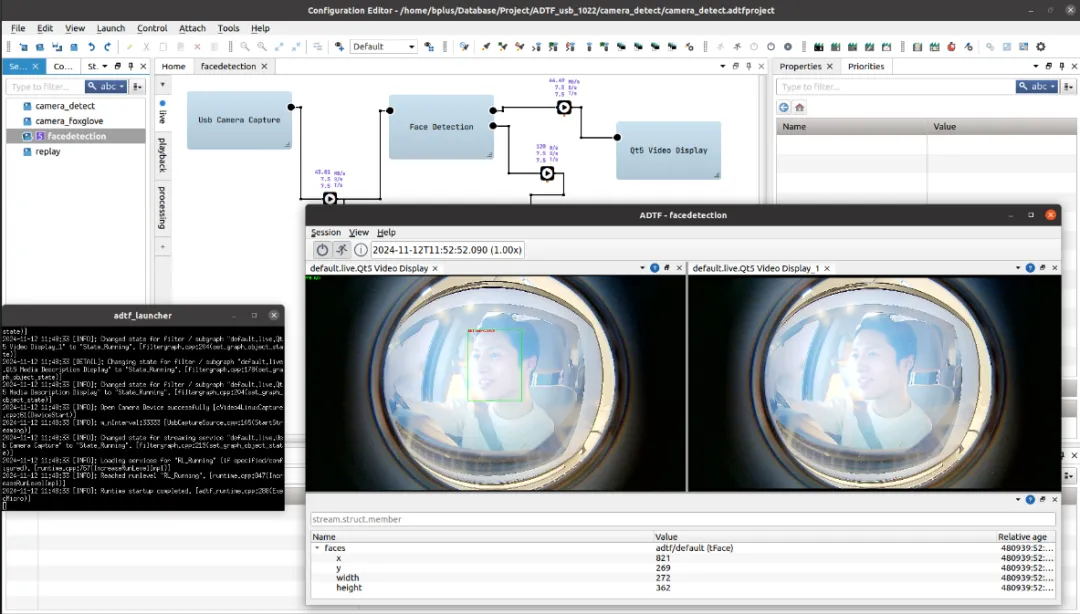

Figure 11: Face recognition algorithm project running

Conclusion

The multi-source camera data acquisition and algorithm integration test solution can significantly improve the efficiency of image acquisition and algorithm development, allowing developers to verify functionality sooner and shorten the development cycle. By combining the BRICKplus/BRICK2 hardware acquisition platform with the ADTF software framework, we can integrate multi-source cameras and complete data acquisition quickly while keeping algorithm testing accurate and timely. As technology needs evolve, we will continue to optimize this solution for more complex and diverse application scenarios.

Product Recommendation

b-plus BRICK2 ADAS Measurement Platform

- Designed for Autonomous Driving and ADAS, supports multi-sensor data synchronization.

- Simultaneous recording and analysis of camera, radar, LiDAR and other data.

- Multi-channel inputs and flexible scalability for R&D teams of different sizes.

- Combined with software tools, development and testing cycles can be dramatically shortened.

BRICKplus High-Performance Autonomous Driving Data Acquisition and Recording Platform

- Powerful computing and storage capabilities ensure synchronization of multiple images and sensor data.

- High stability and portability for many complex road and industrial scenarios.

- Supports rapid testing and deployment in the ADAS or autonomous driving space.

- Provides reliable edge computing performance and reduces the burden on back-end servers